Forget static flows and rigid personas. AI-powered products demand a new UX playbook: one that’s generative, adaptive, and deeply personal.

Since 2017, we’ve helped companies such as Accern and VT.com move beyond buzzwords and build real AI experiences: ones that learn, guide, and respond in the moment.

Here’s how our AI innovation UX design process works, and why designing for intelligence means ditching templates, thinking in use scenarios, and aligning three things: business needs, human behavior, and machine capability.

Key takeaways

- AI UX design begins with deep user research (not tech), uncovering unmet needs, behavior patterns, and real opportunities for AI to create impact.

- Technical discovery aligns business goals, existing infrastructure, and AI capabilities to ensure feasibility, scalability, and long-term value from the start.

- Instead of fixed flows, we design flexible, AI-powered interfaces that adapt dynamically to use scenarios, roles, and real-time context.

- User trust is earned through clear communication of AI confidence, contextual clarity, and transparent control over system behavior and data sources.

- AI transforms how we design but never replaces the human insight, empathy, and strategic thinking that define world-class user experiences.

Step one. User research

Every successful AI strategy starts with a human question: Who are we really building for and why now?

Before diving into AI strategies and tools, machine learning models, or any other emerging technology, we begin with user research. Without a deep understanding of your customers, even the most powerful AI capabilities can miss the mark.

We guide clients through AI innovation workshops to uncover actionable recommendations grounded in real customer behavior. This phase is where organizations gain the clarity to move forward with purpose. We explore essential questions:

- Who are our customers today and tomorrow?

- What are their unmet needs, habits, and motivations?

- What metric defines success — customer experience, operational efficiency, or revenue growth?

- What kind of transformation are we expecting after we implement AI?

The answers shape everything that follows. At this stage, we collect and analyze data to surface patterns, frustrations, and opportunities. We map job stories and build customer journey maps to visualize how people move through their workflows, what slows them down, and where the product can make the biggest impact.

This is especially important in sectors like manufacturing, supply chain, healthcare, and drug discovery, where small changes in user behavior can streamline processes and unlock massive growth opportunities. Understanding how AI technologies can support and enhance customer experiences (rather than just automate tasks) is what separates successful innovations from forgettable experiments.

We’re not talking about features yet. And we’re definitely not talking about LLMs or models for the sake of it. At this point, companies must resist the urge to “implement AI” without a verified user need. Our role is to identify the real-life challenges your customers face and whether AI innovation is the right lens to solve them.

With user needs defined, we then explore the market: competitor research, best practices across industries, and an evaluation of existing AI solutions. We ask:

- Where are others succeeding?

- Which AI tools are gaining traction and why?

- What gaps still exist between what companies offer and what customers expect?

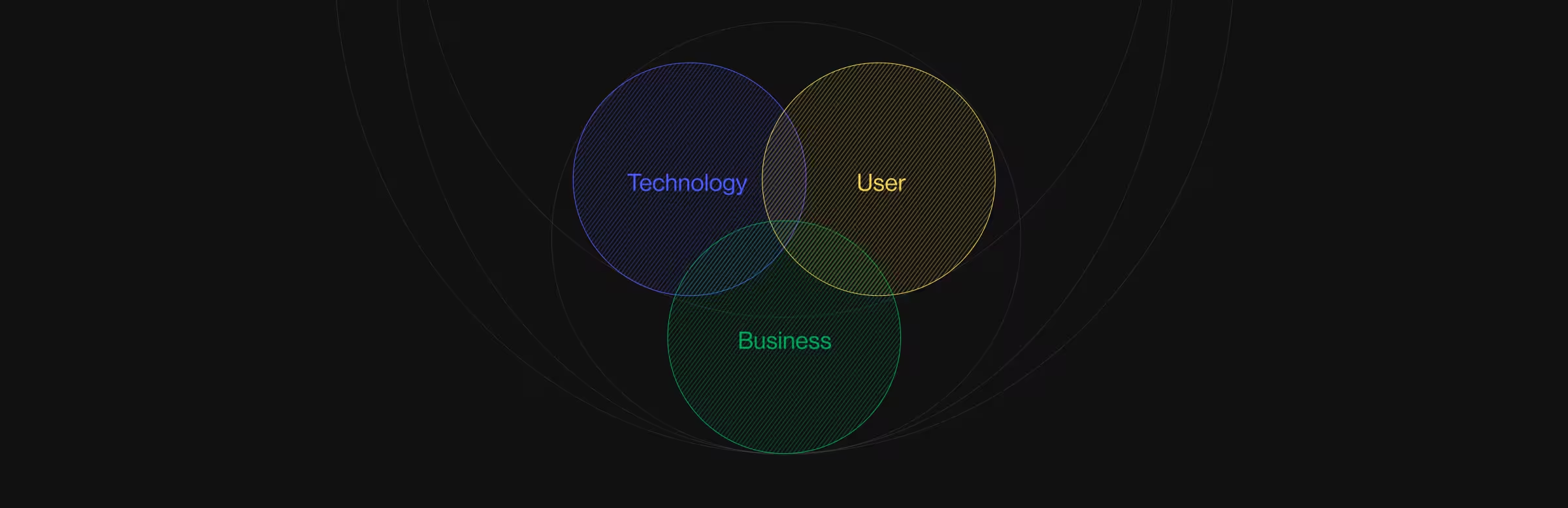

We anchor this entire process in Don Norman’s product design pillars: technology, user, and business. But we don’t lead with the tech. We first validate the business opportunity and customer pain points. Only then do we layer in the right AI capabilities to scale your product intelligently and responsibly.

Step two. Technical discovery & ideation

After we deeply understand customer needs and define opportunities for AI innovation, we shift gears into technical discovery — a faster but no less critical phase. Here, we stress-test ideas against real-world feasibility, available AI technologies, and your team's existing infrastructure.

This is where AI strategy becomes tangible. We ask:

- What AI capabilities and expertise already exist within the company?

- What’s the budget for development, data acquisition, and model training?

- Should we fine-tune a large language model or build a custom one in-house?

- Do we have proprietary data or a plan to source it?

- Which AI tools and frameworks (e.g., NLP, machine learning, computer vision) are accessible, secure, and cost-effective?

- How will we address data governance, verification, and privacy?

This is also where we explore how AI can be used to enhance customer experiences, uncover growth opportunities, and streamline operations.

With guardrails in place, we co-create with dev teams in focused ideation sessions. These aren't whiteboard marathons. They're structured, cross-functional working sessions that translate customer problems into scalable, AI-powered solutions. Our goal is to create something technically sound, strategically relevant, and aligned with both budget and business objectives.

Real-world examples of technical discovery at work

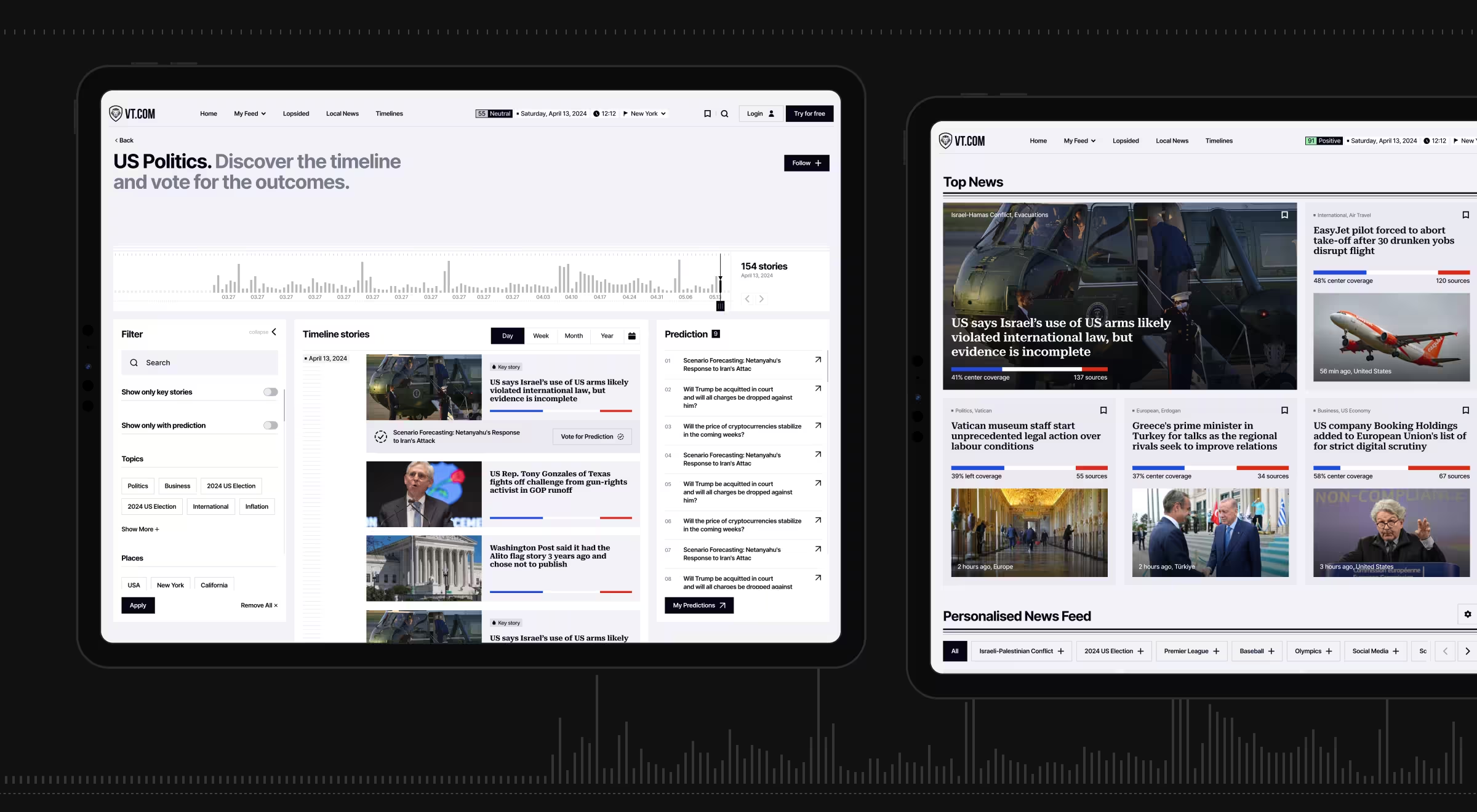

1. VT.com: using AI to reinvent transparent journalism

This media startup came to us wanting to analyze bias in the news using AI. Through rigorous research, we saw a broader opportunity: create a platform that detects, verifies, and summarizes news across multiple sources.

We trained the system using NLP and machine learning to spot bias patterns and flag inconsistencies. This solution addresses content integrity and meets the rising demand for fact-based journalism in a chaotic media landscape.


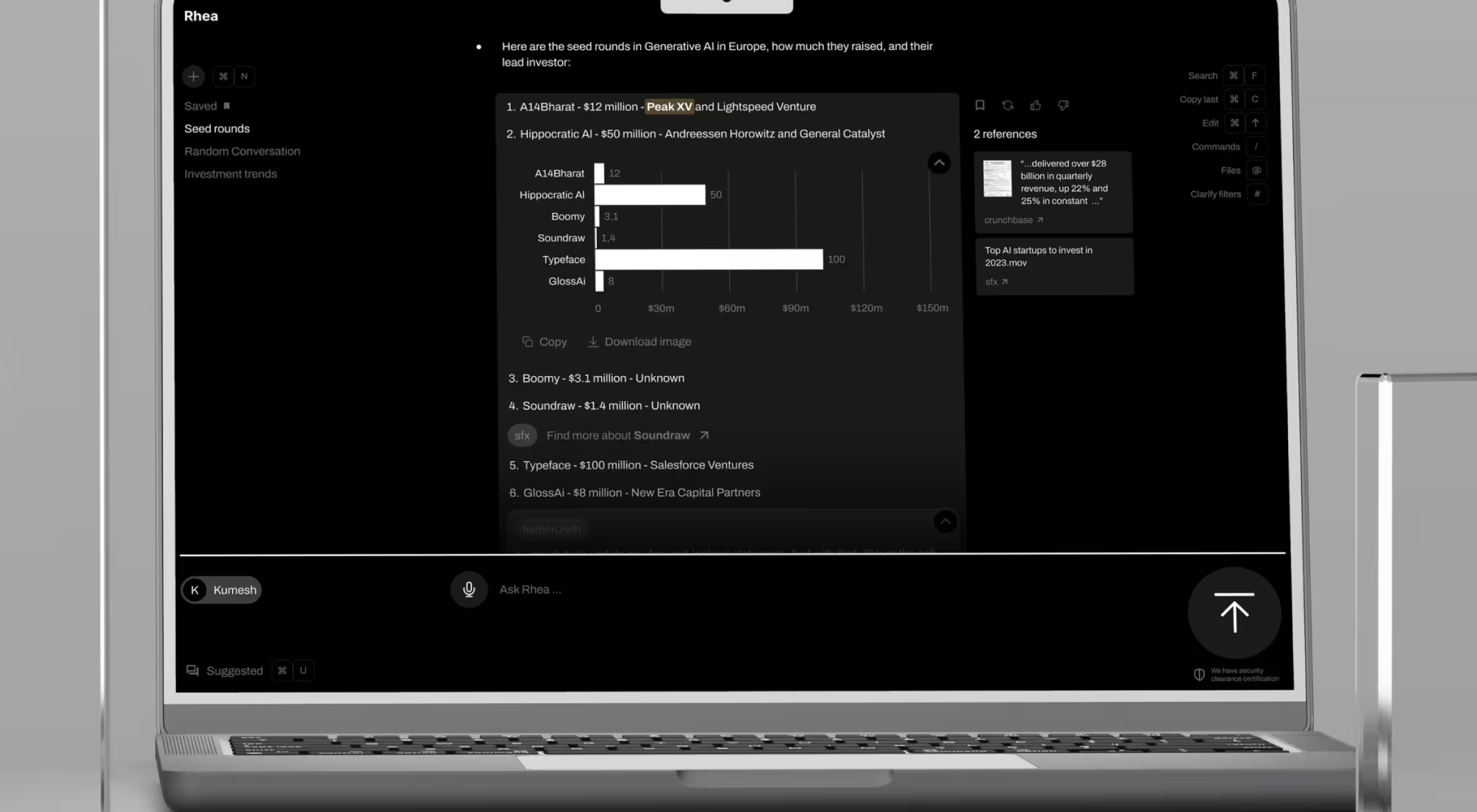

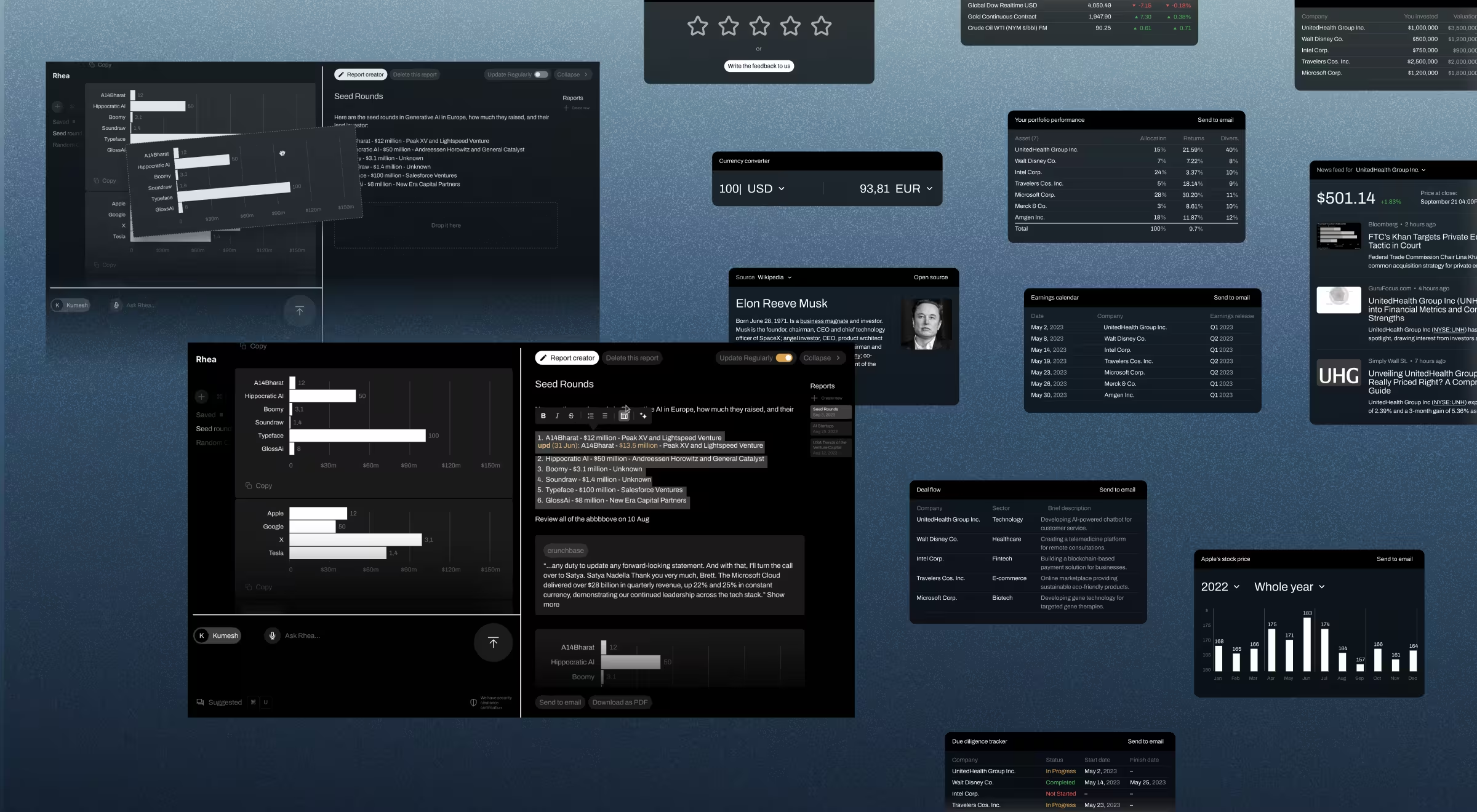

2. Accern’s Rhea: FinTech meets machine learning

In FinTech, speed + accuracy = competitive edge. Our technical discovery for Rhea revealed an untapped goldmine: 10+ years of proprietary financial data.

By training a custom model on this dataset, we powered features like market trend prediction and AI-assisted decision-making. We also developed a modular design system where interface widgets dynamically respond to user prompts, turning static dashboards into AI-driven financial assistants. That’s how we enhanced workflows for analysts while reducing noise.


3. Legal research assistant: from UI to AI-powered legal strategy

In legal tech, compliance is everything. Our client wanted a smarter UX to support in-house legal teams. We went further.

Once we identified that the client had access to well-structured, high-quality legal reports, we built a machine learning model that analyzes a company’s profile and outputs legally accurate, industry-aligned reports.

What started as “a better UX” became a fully AI-integrated platform — one that reduces manual research, ensures consistency, and scales across teams.

Across all industries — media, fintech, legal, and beyond — technical discovery bridges the gap between what customers want and what AI can deliver. It’s where AI innovation becomes execution-ready.

This step transforms ambition into clarity, laying the groundwork for AI products that scale, evolve, and lead.

Step three. Use scenarios & feasibility testing

Traditional UX starts with fixed layouts and static flows. But when you're designing AI-powered products, that playbook doesn’t work. Instead of locking in features too early, we build for flexibility, anticipating a range of user scenarios, contextual triggers, and industry-specific needs.

At this stage, we explore how AI capabilities can meet customer needs across multiple paths. We design dynamic use cases and interface behaviors that adapt in real time based on input, environment, and user intent. Our goal is to create human-centered, AI-enhanced experiences that feel intelligent.

From static wireframes to dynamic interfaces

Instead of designing one fixed flow, we prototype dozens. AI tools automate wireframe generation, so designers can generate variations fast, streamline their process, and focus on strategic decisions.

Using contextual data like time of day, location, and past behavior, we create smart interfaces built on modular design systems. These interfaces adapt via simple logic or complex machine learning models, depending on the product’s goals.
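To make the “simple logic” end of that spectrum concrete, here is a minimal Python sketch. The `Context` fields, the rules, and the widget names are hypothetical, chosen only for illustration; a trained model could replace the rule body while keeping the same inputs and outputs.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical contextual signals; field names are illustrative only."""
    hour: int                                   # local time of day, 0-23
    location: str                               # e.g. "office", "home", "travel"
    recent_actions: list[str] = field(default_factory=list)


def choose_widgets(ctx: Context) -> list[str]:
    """Select interface modules with plain if-rules (no model involved)."""
    widgets = ["search"]                        # always available
    if 6 <= ctx.hour < 11:
        widgets.append("daily_briefing")        # morning summary
    if ctx.location == "travel":
        widgets.append("itinerary")
    if ctx.recent_actions:
        # Offer a shortcut to the action the user repeated most often.
        action, _count = Counter(ctx.recent_actions).most_common(1)[0]
        widgets.append(f"shortcut:{action}")
    return widgets
```

For example, `choose_widgets(Context(hour=8, location="travel", recent_actions=["export", "export", "share"]))` returns `["search", "daily_briefing", "itinerary", "shortcut:export"]`, while an evening session at home gets only `["search"]`.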

OLD vs. NEW: understanding user intent

Before AI:

Search engines returned results based on keywords, semantics, and SEO signals. Users waded through cluttered results, links, and ads. It was technically efficient but emotionally disconnected. Think: an algorithmic genie that follows commands without understanding meaning.

With AI innovation:

We now decode real user intent using natural language processing (NLP), sentiment analysis, and context recognition. Instead of throwing data at users, we serve pre-trained, hyper-personalized solutions. This elevates both efficiency and customer experience, driving long-term engagement.

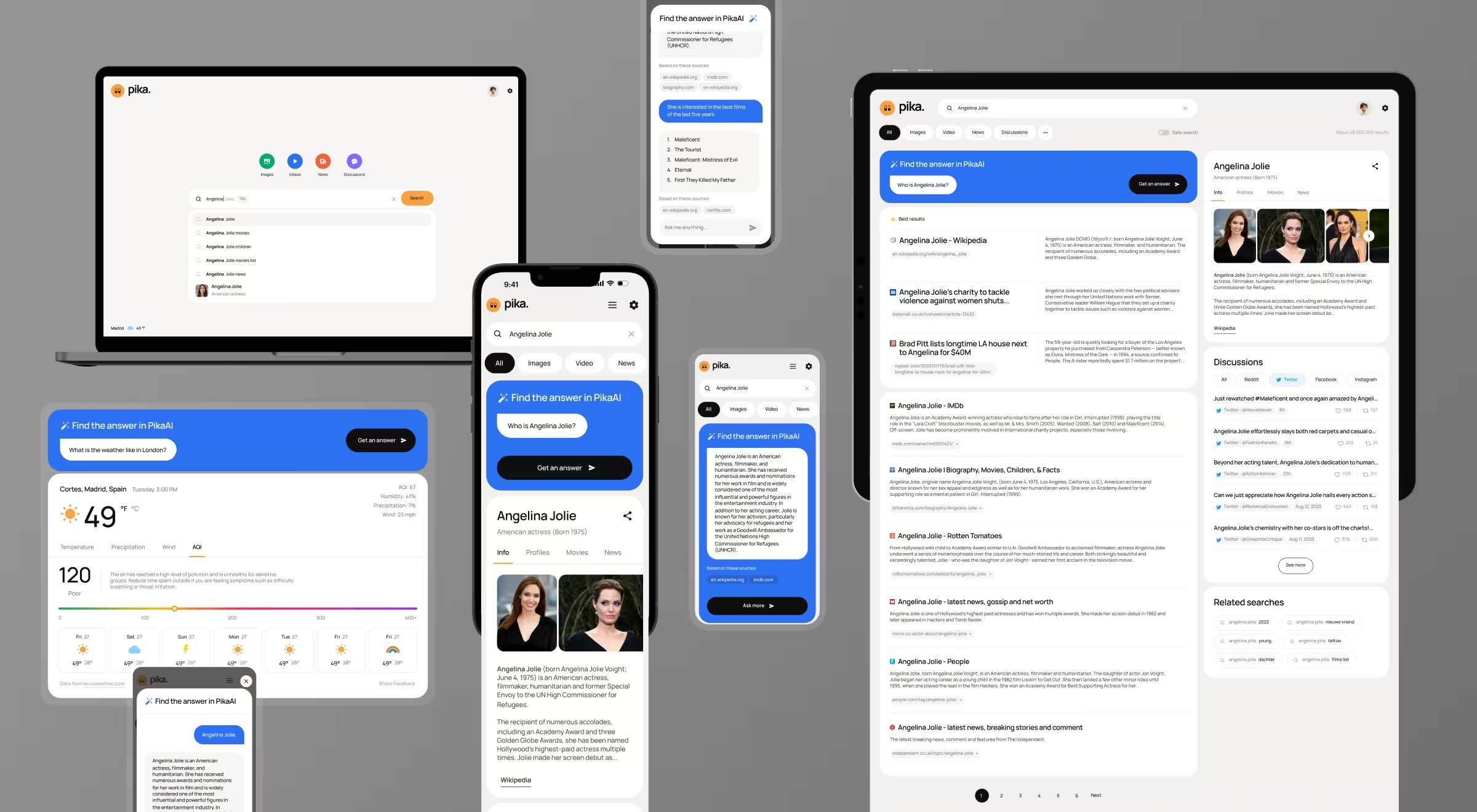

Use case: Pika AI – search engine, reinvented

In our Pika AI project, we built a search engine that doesn’t just respond to queries but also understands them. Ask, “best places to eat near me,” and the AI classifies it as a planning scenario, drills into subcategories (like hobbies or outdoor plans), and filters results based on real-time context: weather, pricing, safety alerts (e.g., wildfires), and even mood. The result is a fully personalized micro-itinerary, generated in seconds.

Now scale that. A different query — like one for logistics or business travel — leads to a completely different experience. The UI rebuilds itself dynamically, ensuring relevance across diverse industries and user types.
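The two stages of that flow (classify the query, then filter by live context) can be sketched in a few lines of Python. This is a simplified illustration, not the Pika AI implementation: keyword matching stands in for the NLP intent model, the scenario names, `Place` fields, and filter rules are assumptions, and the mood signal and subcategory drill-down are left out.

```python
from dataclasses import dataclass

# Hypothetical keyword lists standing in for a trained intent classifier.
SCENARIO_KEYWORDS = {
    "planning": ["places", "eat", "weekend", "things to do"],
    "logistics": ["shipment", "freight", "delivery"],
    "business_travel": ["flight", "hotel", "conference"],
}


@dataclass
class Place:
    name: str
    outdoor: bool
    price_level: int       # 1 (cheap) to 4 (expensive)
    in_alert_zone: bool    # e.g. inside an active wildfire warning area


def classify(query: str) -> str:
    """Return the scenario whose keyword list has the most matches, or "general"."""
    q = query.lower()
    scores = {name: sum(kw in q for kw in kws) for name, kws in SCENARIO_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "general"


def filter_places(places: list[Place], raining: bool, max_price: int) -> list[Place]:
    """Drop results that conflict with real-time context (safety, budget, weather)."""
    return [
        p for p in places
        if not p.in_alert_zone
        and p.price_level <= max_price
        and not (raining and p.outdoor)
    ]
```

Here `classify("best places to eat near me")` returns `"planning"`, while `"track my freight delivery"` routes to `"logistics"`, which would trigger a different layout. Substring matching is deliberately naive (“eat” also matches “great”), which is exactly why a production system relies on an NLP model for this step.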

Use case: Rhea – financial research with AI lenses

For Rhea, our AI-driven FinTech platform project, we designed modular widgets tied directly to financial analysts' workflows:

- Market & region evaluation

- Peer comparison & trend forecasting

- Product & startup potential analysis

- Data-backed decision-making & communication

Each widget maps to a real-world task. And with Rhea 2.0, we introduced “Lenses” — specialized modes for Venture Capital, Equity Research, and Product Analysis. Powered by LLMs trained on domain-specific datasets, these Lenses ensure every user gets a tailored experience aligned with their role, strategy, and performance goals.
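One way to represent a Lens is as a preset that bundles a widget layout with role-specific model instructions. In this hypothetical Python sketch, per-lens system prompts stand in for the domain-trained LLMs described above; the lens keys, widget names, and prompt text are illustrative assumptions, and the message format simply follows the common chat-style `role`/`content` convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lens:
    """A role-specific preset over the shared widget library (hypothetical)."""
    name: str
    widgets: tuple[str, ...]
    system_prompt: str


LENSES = {
    "venture_capital": Lens(
        "Venture Capital",
        ("market_region", "startup_potential", "peer_comparison"),
        "You support a venture analyst. Prioritize market size, team, and traction.",
    ),
    "equity_research": Lens(
        "Equity Research",
        ("peer_comparison", "trend_forecast", "decision_memo"),
        "You support an equity analyst. Prioritize financials, valuation, and peers.",
    ),
    "product_analysis": Lens(
        "Product Analysis",
        ("startup_potential", "market_region"),
        "You support a product analyst. Prioritize features, positioning, and adoption.",
    ),
}


def build_request(lens_key: str, user_query: str) -> dict:
    """Combine the lens layout and instructions with the user's query."""
    lens = LENSES[lens_key]
    return {
        "layout": list(lens.widgets),  # widgets the UI should render, in order
        "messages": [                  # chat-style payload for the model
            {"role": "system", "content": lens.system_prompt},
            {"role": "user", "content": user_query},
        ],
    }
```

The same query sent through two lenses yields two different layouts and two differently framed answers, while the widget library itself stays shared.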

Use case: Suits AI – the role-based productivity assistant

We applied the same logic to Suits AI, a mobile virtual assistant designed for professionals. Using role-specific language models, Suits delivers support across multiple domains:

- Business Assistance

- Product Design

- Marketing Research

- Sales Enablement

Each function operates with its own predefined rules and AI-powered flows, boosting productivity while reducing mental friction. This level of personalization at scale is only possible with a tightly integrated AI UX framework.

Feasibility testing: bridging AI innovation with reality

Once we map use cases and prototype flows, we partner with dev teams to run feasibility testing and proofs of concept. Here’s where we answer the hard questions:

- Can the system reliably handle the use scenario at scale?

- Do the models meet expectations across data types and edge cases?

- Is the solution viable within current budget, data availability, and security requirements?

If the answer is no, we pivot. We explore alternate AI technologies, retrain models, or reframe the use case. Innovation isn’t about forcing tech into a product. It’s about building something that performs in the real world.

This step turns abstract AI concepts into validated design artifacts. It ensures that what we create not only meets customer expectations, but also aligns with your organization’s resources, operations, and long-term strategy.

Step four. Identifying user challenges within the AI interface

Even the most powerful AI technologies can fail if users don’t trust them. That’s why in this phase, we focus on making AI-powered interfaces safe, clear, and comfortable to use.

It’s not enough for the system to be capable. It has to feel helpful. That means identifying and addressing the friction points that arise when real people interact with complex systems powered by machine learning, natural language processing, and large language models.

Key usability challenges we solve:

- How do we communicate the model’s confidence level?

- Can we show the sources or references used?

- How do we overcome the articulation barrier (when users don’t know what to ask)?

- How do we leverage context to improve output?

- How do we reduce the risk of mismatched or irrelevant responses?

- How can we increase accuracy and transparency without adding complexity?

These questions aren’t hypothetical. They’re daily design challenges when building AI-powered products at scale.

So, how do we tackle these challenges in practice?

✅ 1. Overcoming the articulation barrier

One of the biggest blockers in AI-powered experiences is the moment when a user knows what they want but doesn’t know how to ask.

To bridge this human-AI gap, we introduce clarifying questions driven by contextual data. In Rhea, the system dynamically prompts users with helpful follow-ups based on tags, prior behavior, and query type. This turns a dead-end query into a meaningful, personalized conversation, reducing friction and enhancing customer experience.

This approach also makes the interface feel intelligent and collaborative.
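A minimal sketch of that clarifying-question logic, assuming each query type has a set of required “slots” and that slots already filled by the query (for example, via tags) arrive as a dictionary. The slot names, question templates, and the use of prior answers as suggested defaults are hypothetical illustrations, not Rhea’s actual rules.

```python
# Hypothetical required "slots" per query type.
REQUIRED_SLOTS: dict[str, list[str]] = {
    "company_analysis": ["company", "time_range"],
    "market_scan": ["region", "sector"],
}

# Hypothetical open-ended follow-ups for each slot.
FOLLOW_UPS: dict[str, str] = {
    "company": "Which company should I analyze?",
    "time_range": "What period matters to you (e.g. last quarter, last 5 years)?",
    "region": "Which region should I focus on?",
    "sector": "Which sector are you interested in?",
}


def clarifying_questions(
    query_type: str,
    extracted: dict[str, str],
    prior_answers: dict[str, str],
) -> list[str]:
    """Return follow-up prompts for required slots the query left empty.

    If the user answered a slot in an earlier session (prior_answers), offer
    that answer as a one-tap suggestion instead of asking an open question.
    """
    questions = []
    for slot in REQUIRED_SLOTS.get(query_type, []):
        if slot in extracted:
            continue  # already specified by the query or its tags
        if slot in prior_answers:
            label = slot.replace("_", " ")
            questions.append(f"Use {prior_answers[slot]} for {label} like last time?")
        else:
            questions.append(FOLLOW_UPS[slot])
    return questions
```

For instance, `clarifying_questions("market_scan", {"sector": "fintech"}, {"region": "EU"})` returns `["Use EU for region like last time?"]`: one quick confirmation instead of an open-ended question.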

✅ 2. Communicating confidence and building trust

Users need to know: Where is this answer coming from? Can I trust it?

In Rhea, we visualize the number of sources used to generate each insight. The interface shows real-time verification data: how many documents were pulled, which sources were referenced, and how fresh the data is. This level of transparency transforms abstract AI outputs into actionable, defensible recommendations.

In legal tech projects, we push this even further, highlighting the exact document snippets used in chatbot responses. These are pulled from a memory buffer that users can inspect, manage, and even modify.
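The data behind a per-insight transparency badge like Rhea’s can be computed with a small helper. This Python sketch assumes each source carries a publisher and a publication date, and that the pipeline already produces a confidence score between 0 and 1; the thresholds and label wording are placeholders to be tuned in usability testing.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Source:
    title: str
    publisher: str
    published: date


def source_badge(sources: list[Source], today: date, confidence: float) -> dict:
    """Summarize the evidence behind one AI insight for display next to it."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Placeholder thresholds: calibrate against how users actually read the labels.
    if confidence >= 0.8:
        label = "High confidence"
    elif confidence >= 0.5:
        label = "Medium confidence"
    else:
        label = "Low confidence: verify before acting"
    newest: Optional[date] = max((s.published for s in sources), default=None)
    return {
        "source_count": len(sources),                          # how many documents were pulled
        "publishers": sorted({s.publisher for s in sources}),  # which sources were referenced
        "freshest_age_days": (today - newest).days if newest is not None else None,
        "label": label,
    }
```

Keeping the badge data separate from the rendering lets the same summary drive a compact chip in a dashboard and an expanded source list in a detail view.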

✅ 3. Empowering users with control over AI context

We believe AI should adapt to the user — not the other way around.

In our legal platform, we gave users the power to adjust the AI model’s memory buffer, in effect curating what goes into its context window. This means users can control which documents, conversations, or data sources the AI references in real time.
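A simplified model of user-controlled context: users can pin or exclude items, and the system fills the remaining budget by relevance. This is a sketch under stated assumptions: token cost is approximated by word count (a real system would use the model’s own tokenizer), and the `MemoryItem` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    doc_id: str
    text: str
    relevance: float         # retrieval score; higher means more relevant
    pinned: bool = False     # user forced this item into context
    excluded: bool = False   # user removed this item from context


def build_context(items: list[MemoryItem], token_budget: int) -> list[str]:
    """Return the doc_ids to send to the model, honoring the user's choices.

    Order: pinned items first, then remaining items by descending relevance.
    Excluded items are never sent. Token cost is approximated by word count.
    """
    candidates = [m for m in items if not m.excluded]
    # False sorts before True, so "not pinned" puts pinned items first.
    candidates.sort(key=lambda m: (not m.pinned, -m.relevance))
    chosen: list[str] = []
    used = 0
    for m in candidates:
        cost = len(m.text.split())
        if used + cost > token_budget:
            continue  # skip it; a smaller item further down may still fit
        chosen.append(m.doc_id)
        used += cost
    return chosen
```

Skipping an item that does not fit, rather than stopping, lets smaller relevant items use the leftover budget. In this sketch a pinned item larger than the budget is also skipped silently; a production UI should tell the user when that happens.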

Why it matters:

This is strategic alignment. By giving users more control over context, we help them drive better outcomes with fewer inputs, which is essential in industries like law, healthcare, and finance where accuracy, compliance, and personalization intersect.

Designing for the final goal

Here’s where most AI products stumble: they make users do the work to get the value.

We take the opposite approach. We automate away every possible step that doesn’t serve the user’s final objective, whether that’s making an investment decision, generating a legal report, or booking a meeting.

By identifying friction points and re-engineering them with AI-powered logic, we remove cognitive load and let users focus on the outcome.

So, AI innovation is not about building cool tools. It’s about designing human-centric systems that solve real problems. At this step, we zoom in on the user's emotional and practical experience because trust, control, and clarity are non-negotiable in the new era of AI-driven design.

This step ensures your product earns trust, drives action, and scales intelligently across industries and use cases.

Step five. UI design, modular system & usability testing

This is where the AI-powered product finally takes shape.

We shift from exploration to execution, building out real interfaces grounded in user needs, enriched with AI capabilities, and structured through a dynamic design system that adapts as users interact with it.

But instead of locking into static templates, we design flexible systems that evolve.

How to design for a new era of AI-driven interfaces

Our approach blends traditional interface design with the power of generative AI and machine learning. While UI scripting and visual refinement are still essential, the role of the designer expands: now they teach the AI how to build.

We start by developing a modular design system — a flexible library of widgets, components, and interaction rules trained to respond to specific use scenarios. Each widget is mapped to a user goal, and the system dynamically assembles layouts based on context, user behavior, and business logic.

Think of it as a self-building interface. No more pre-made layouts or rigid screens. Instead, the UI regenerates itself for each task, each role, and each moment, driven by AI tools, contextual data, and predictive design patterns.
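To illustrate the idea, here is a hypothetical widget registry in which each component declares the goals it serves and the roles that may see it, plus a function that assembles a layout from whatever matches. The registry entries and priority scheme are assumptions for illustration; in a fully AI-driven version, a model would infer the goal from context instead of receiving it as an argument.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Widget:
    name: str
    goals: frozenset[str]   # user goals this widget serves
    roles: frozenset[str]   # roles allowed to see it ("*" means everyone)
    priority: int           # higher renders first


# Hypothetical registry; in practice it would mirror the design-system library.
REGISTRY = [
    Widget("kpi_summary", frozenset({"monitor"}), frozenset({"*"}), 5),
    Widget("trend_chart", frozenset({"monitor", "forecast"}), frozenset({"analyst", "manager"}), 4),
    Widget("forecast_panel", frozenset({"forecast"}), frozenset({"analyst"}), 6),
    Widget("ask_ai", frozenset({"monitor", "forecast"}), frozenset({"*"}), 1),
]


def assemble_layout(goal: str, role: str, max_slots: int = 3) -> list[str]:
    """Pick the widgets that serve this goal for this role, highest priority first."""
    eligible = [
        w for w in REGISTRY
        if goal in w.goals and ("*" in w.roles or role in w.roles)
    ]
    eligible.sort(key=lambda w: w.priority, reverse=True)
    return [w.name for w in eligible[:max_slots]]
```

With this registry, `assemble_layout("forecast", "analyst")` yields `["forecast_panel", "trend_chart", "ask_ai"]`, while a manager monitoring the same product gets `["kpi_summary", "trend_chart", "ask_ai"]`. Adding a new screen becomes a matter of registering a widget, not drawing a new layout.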

The UX designer’s new role is AI educator

In this model, the UX designer is no longer just shaping pixels; they’re shaping intelligence. Their job is to:

- Define how components relate to real-world customer needs

- Map interactions between widgets and AI prompts

- Train the system on how to respond to intent, priority, and constraints

- Ensure outputs are intuitive, actionable, and contextually appropriate

This is where AI innovation becomes a design discipline. And where real growth opportunities emerge through interfaces that scale and adapt in real time.

Testing for real-world scalability

As the system becomes more intelligent, usability testing shifts from static validation to scenario-based stress testing. We run modular prototypes through high-pressure simulations measuring:

- Flexibility across tasks and industries

- Accuracy of outputs generated by the AI

- Speed of user task completion

- Comfort and trust levels across personas

From supply chain dashboards to healthcare apps, the goal remains the same: build UI systems that work for people, not just screens.
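Results from these simulations are easier to compare when aggregated per persona. This Python sketch assumes each trial records the scenario, the persona, whether the AI output was correct, task time in seconds, and a 1 to 5 trust rating from a post-task survey; the field names and rating scale are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    scenario: str
    persona: str
    output_correct: bool
    seconds_to_complete: float
    trust_rating: int   # post-task survey answer, 1-5 (hypothetical scale)


def summarize(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Aggregate flexibility, accuracy, speed, and trust per persona."""
    by_persona: dict[str, list[Trial]] = {}
    for t in trials:
        by_persona.setdefault(t.persona, []).append(t)
    report = {}
    for persona, ts in by_persona.items():
        report[persona] = {
            "scenarios": len({t.scenario for t in ts}),             # breadth covered
            "accuracy": sum(t.output_correct for t in ts) / len(ts),
            "mean_seconds": mean(t.seconds_to_complete for t in ts),
            "mean_trust": mean(t.trust_rating for t in ts),
        }
    return report
```

A persona whose accuracy holds but whose trust score lags is a signal to revisit how the interface explains its outputs, not to retrain the model.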

Step six. Onboarding, final testing & handoff

A great AI product doesn’t stop at a smart interface. It teaches users how to use it confidently.

This final step is about polishing the product experience with refined onboarding, responsive support systems, and intuitive guidance baked into the design. We prioritize:

- Clear user education on how the AI works

- Constant access to help, documentation, and system feedback

- Transparency and traceability of AI actions and decisions

- User control over key settings like memory buffers, filters, or customization options

Every interaction is tested to ensure it aligns with the user’s goal and mental model. And every piece of the system (from search to support) is designed to streamline processes, not slow them down.

With extensive validation behind us, we move into the handoff, equipping your team with everything needed to deploy, monitor, and evolve the product:

- A fully documented, modular design system

- Front-end specs aligned with AI model behaviors

- Test coverage across scenarios and edge cases

- Long-term recommendations for AI training, data pipeline management, and feature evolution

We open a new chapter where your organization owns a living, learning product designed to adapt with demand, scale with complexity, and support your business growth in the AI-driven future.

How AI is used in UX design for AI products

Throughout this article, we’ve walked through Lazarev.agency’s unconventional yet proven process for designing AI-powered user experiences — one that merges deep user understanding with AI technologies.

But let’s address the big question: Can AI fully replace UX designers?

Not yet, and likely not ever. Instead, we see AI as a strategic partner in the design process. When used right, it enhances creativity, accelerates workflows, and supports human-led innovation at scale.

Where AI brings real value to UX design

AI tools are becoming foundational to modern UX workflows. At Lazarev.agency, we leverage a wide range of AI capabilities across the UX lifecycle:

- AI-powered design assistance in tools like Figma (layer organization, vector generation)

- Smart image editing in Photoshop with context-aware generation

- Research support via ChatGPT and other NLP tools for clustering and summarizing interview data

- Automated testing and behavior tracking using machine learning models

- Generative UX patterns that adapt layouts based on real-time user context

These AI tools help analyze massive volumes of data, streamline operations, and generate rapid design iterations, all while freeing up designers to focus on strategic and human-centric challenges.

Key benefits of using AI in UX design

Here’s how businesses and designers gain when AI is integrated into the UX process:

✅ Streamlined processes

AI automates repetitive tasks like wireframing, layout generation, and content tagging, making design faster and more agile.

✅ Deeper insights into customer needs

AI helps parse large user datasets to uncover hidden behavior patterns and pain points across industries and personas.

✅ Personalization at scale

By leveraging machine learning and user context, we design experiences that adapt dynamically to individual users. This is essential for sectors like healthcare, manufacturing, finance, and ecommerce.

✅ Smarter collaboration and decision-making

With data-backed insights and actionable recommendations, AI helps bridge the gap between design, product, and engineering teams.

✅ Enhanced accessibility

AI innovations like voice recognition, automatic contrast adjustment, and intent detection improve inclusivity for users with diverse needs.

Why human expertise still matters

AI brings power, but it lacks nuance.

Truly exceptional design still starts with empathy, strategy, and deep human insight. Understanding emotion, cultural context, brand voice, and edge-case scenarios requires human designers who can zoom out, connect the dots, and design for real people.

The creative direction, research framing, and emotional intelligence that humans bring to the table remain irreplaceable. As AI evolves, human UX designers evolve too, becoming orchestrators of adaptive, intelligent systems.

Final take

Welcome to the new era of user experience.

In a world where every company is racing to build custom AI models, it's not just about the data anymore. The real competitive edge lies in how you design the AI experience itself — how well it understands, adapts, and supports your users at scale.

Say goodbye to static personas and fixed layouts. The future belongs to modular, intelligent systems that evolve with every interaction. But to get there, you’ll need more than great tools. You’ll need an AI strategy rooted in UX psychology and business logic.

So, what now?

👉 Invest in UX research that sets the foundation for hyper-personalized, scalable experiences.

👉 Adopt modular design systems powered by AI tools that align with your organization’s needs.

👉 And if you’re ready to move fast and design smarter, partner with Lazarev.agency, an AI UX agency. We help companies turn AI from an abstract promise into a product users trust, love, and rely on.
